Dear Readers,

Although it was first coined in the 17th century, the term “algorithm” was until very recently obscure jargon, rarely used outside the field of computer science. Today, algorithms are scarcely out of the popular news headlines, such is the increasing sway they exert over every aspect of modern life.

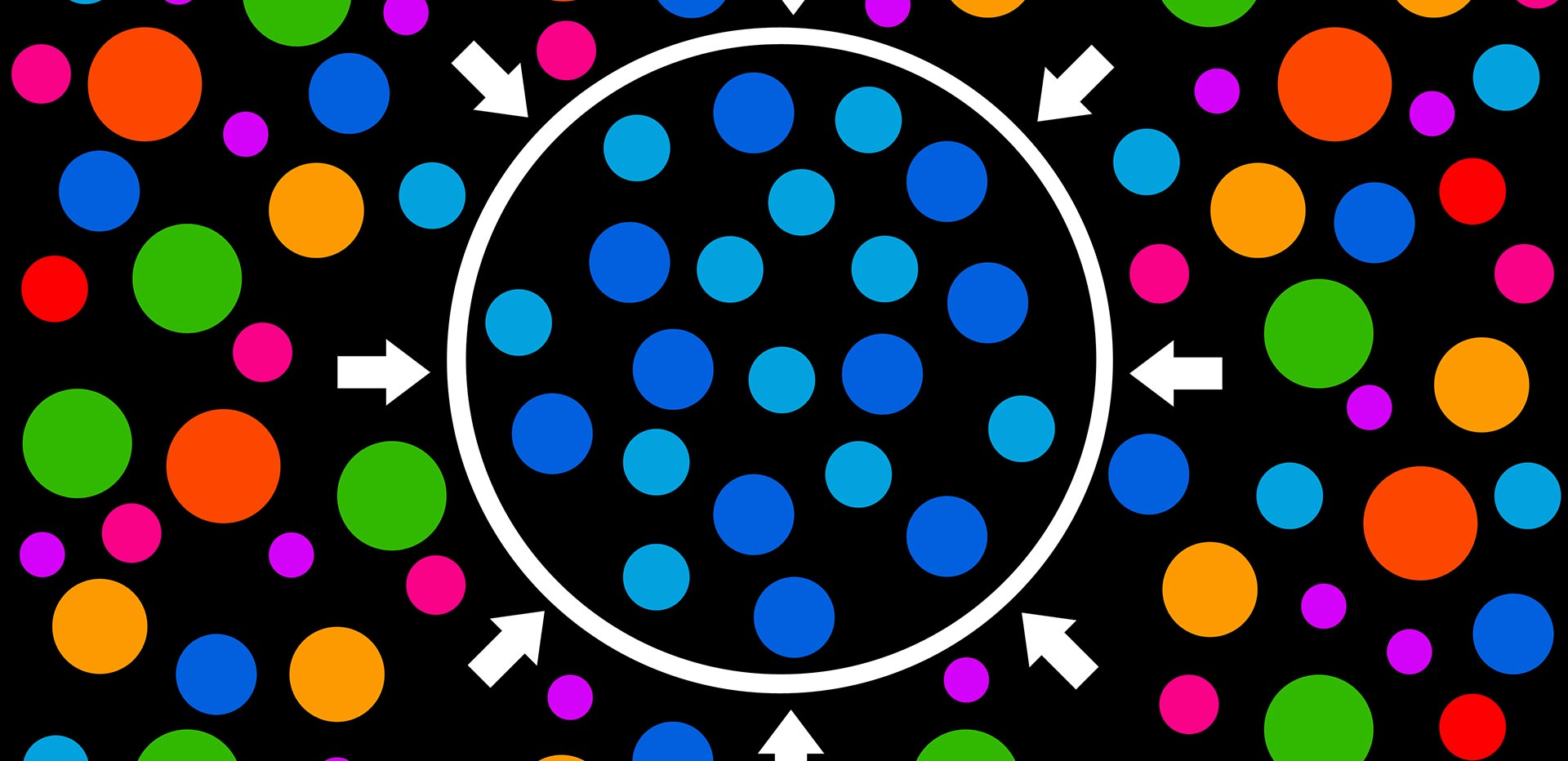

At its core, an algorithm is a neutral mechanism, defined as “a step-by-step procedure for solving a problem or accomplishing some end.” Leaving aside the advanced algorithms used by the tech giants, the procedures schoolchildren learn for addition, subtraction, division, and multiplication are themselves “algorithms,” albeit implemented with pencil and paper. Nonetheless, when the power of algorithms is amplified by vast datasets and computing resources, there is a risk that they will be put to socially undesirable ends (whether intentionally or unintentionally). This is typically the result of introducing a real or perceived bias into either the algorithm itself or the input data upon which it operates.

In the antitrust context, “algorithmic bias” has famously been invoked in cases accusing large tech companies of favoring their own products or services to the detriment of competitors. But this is the tip of the iceberg, as algorithms are embedded by definition into any automated process engaged in by commercial entities, and also increasingly influence aspects of the operation of public bodies (including, potentially, antitrust en
